The Evolution of Database Design

Database schema design is the blueprint of modern software architecture. A poorly designed schema leads to data anomalies, slow performance, and scalability bottlenecks. Traditionally, normalization (the process of organizing data to reduce redundancy and improve data integrity) was a manual, painstaking task requiring a solid grasp of relational theory, particularly functional dependencies. Artificial Intelligence has since transformed this phase of development. AI normalization optimizes a database schema by progressively refining its structure to eliminate redundancy and enforce data integrity.

Understanding AI Normalization

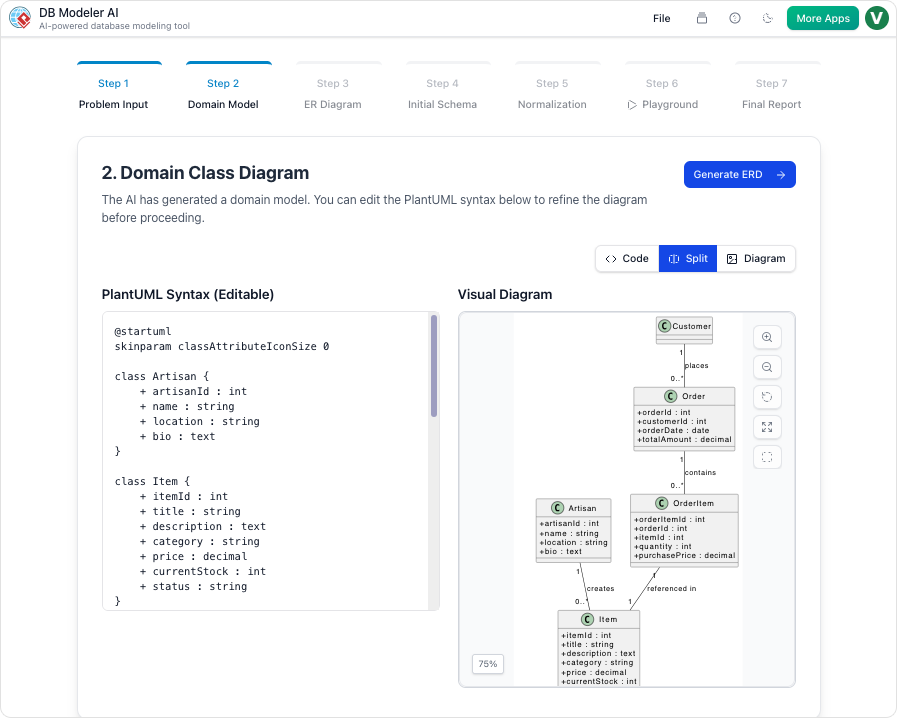

At its core, AI normalization acts as an automated architect. In tools such as the DB Modeler AI workflow, it runs as an automated “Intelligent Normalization” stage (Step 5 of the workflow). The AI analyzes a conceptual data model and systematically reorganizes it to conform to the standard normal forms.

The Filing Cabinet Analogy

To visualize this process, imagine decluttering a messy filing cabinet. In a disorganized system, a customer’s contact information might be scribbled on twenty different project folders. This is data redundancy: if the customer moves, you must update twenty separate files, and each one is a chance to make an error. AI normalization acts as a professional organizer. It identifies these duplicates, creates a single master file for the customer, and puts a simple “reference key” in each project folder pointing back to that master file. This approach saves storage space, gives every fact exactly one place to look it up, and ensures that a single update is reflected across the entire system.
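The organizer’s job can be sketched in a few lines of Python. This is a minimal illustration, assuming the customer name uniquely identifies a customer (a simplification; real schemas would use a proper key). The record layout and the `normalize` helper are hypothetical and do not describe how DB Modeler AI works internally.

```python
# Hypothetical "messy cabinet": every project folder repeats the customer's phone number.
projects_flat = [
    {"project": "Website redesign", "customer": "Acme Corp", "phone": "555-0100"},
    {"project": "Mobile app", "customer": "Acme Corp", "phone": "555-0100"},
    {"project": "Data migration", "customer": "Globex", "phone": "555-0199"},
]


def normalize(rows):
    """Split flat rows into a customer master table and projects that reference it by key.

    Assumes the customer name identifies the customer; the first phone seen is kept.
    """
    customers = {}  # customer_id -> contact record (the single "master file")
    key_of = {}     # customer name -> assigned customer_id
    projects = []
    for row in rows:
        name = row["customer"]
        if name not in key_of:
            cid = len(key_of) + 1
            key_of[name] = cid
            customers[cid] = {"name": name, "phone": row["phone"]}
        # Each project keeps only a reference key, not a copy of the contact details.
        projects.append({"project": row["project"], "customer_id": key_of[name]})
    return customers, projects


customers, projects = normalize(projects_flat)

# The customer moves: one update, and every project sees the new number.
customers[1]["phone"] = "555-0123"
for p in projects:
    print(p["project"], customers[p["customer_id"]]["phone"])
```

Both Acme Corp projects now report `555-0123`, even though only one record was changed.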

The Core Mechanisms of Optimization

The optimization process within AI-driven modeling tools functions through several sophisticated mechanisms designed to bridge the gap between rough concepts and production-ready structures.

1. Stepwise Progression (1NF to 3NF)

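The AI does not merely patch the database; it systematically reconstructs it. The engine advances the schema through the First (1NF), Second (2NF), and Third (3NF) Normal Forms, and each step removes a specific kind of problem. 1NF eliminates repeating groups so that every column holds a single atomic value. 2NF removes partial dependencies, where a non-key attribute depends on only part of a composite primary key. 3NF removes transitive dependencies, where a non-key attribute depends on another non-key attribute. The result adheres strictly to relational modeling principles: every non-key attribute depends on the key, the whole key, and nothing but the key.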
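To make the three steps concrete, here is a minimal Python sketch on a hypothetical orders example. It flattens a repeating group (1NF), then uses a simple functional-dependency check on sample rows to find partial and transitive dependencies and splits the table accordingly (2NF, 3NF). The table names, columns, and the `fd_holds` and `project` helpers are assumptions for illustration. A dependency that happens to hold in a few sample rows is only a candidate, so a real design process also needs knowledge of what the data means.

```python
# Hypothetical unnormalized orders: `items` is a repeating group.
raw_orders = [
    {"order_id": 1, "customer_id": 10, "customer_city": "Oslo",
     "items": [("P1", "Pen", 2), ("P2", "Pad", 1)]},
    {"order_id": 2, "customer_id": 11, "customer_city": "Rome",
     "items": [("P1", "Pen", 5)]},
]

# 1NF: one row per (order, product) pair; every column holds a single value.
order_lines = [
    {"order_id": o["order_id"], "product_id": pid, "product_name": name, "qty": qty,
     "customer_id": o["customer_id"], "customer_city": o["customer_city"]}
    for o in raw_orders
    for pid, name, qty in o["items"]
]


def fd_holds(rows, lhs, rhs):
    """True if equal values on the `lhs` columns always come with equal `rhs` values."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        val = tuple(row[c] for c in rhs)
        if seen.setdefault(key, val) != val:
            return False
    return True


def project(rows, cols):
    """Keep only `cols`, dropping duplicate rows (first-occurrence order preserved)."""
    distinct = dict.fromkeys(tuple(r[c] for c in cols) for r in rows)
    return [dict(zip(cols, t)) for t in distinct]


KEY = ("order_id", "product_id")
NON_KEY = ("product_name", "qty", "customer_id", "customer_city")

# 2NF check: non-key attributes that depend on only one part of the composite key.
partial = [(attr, part) for attr in NON_KEY for part in KEY
           if fd_holds(order_lines, (part,), (attr,))]
print(partial)
# -> [('product_name', 'product_id'), ('customer_id', 'order_id'), ('customer_city', 'order_id')]

# 2NF: move each partially dependent group into a table keyed by the part it depends on.
products = project(order_lines, ("product_id", "product_name"))
orders = project(order_lines, ("order_id", "customer_id", "customer_city"))
lines = project(order_lines, ("order_id", "product_id", "qty"))

# 3NF: in `orders`, order_id -> customer_id -> customer_city is a transitive dependency.
print(fd_holds(orders, ("customer_id",), ("customer_city",)))  # -> True
customers = project(orders, ("customer_id", "customer_city"))
orders = project(orders, ("order_id", "customer_id"))
```

The final tables are `products`, `customers`, `orders`, and `lines`; only `qty` still depends on the full composite key, which is exactly where 2NF wants it.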

2. Redundancy Elimination

A primary directive of AI normalization is the identification and elimination of data redundancy. By rigorously reducing duplicate data, the AI minimizes storage overhead and prevents the update, insertion, and deletion anomalies that plague denormalized systems.
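An update anomaly is easy to reproduce. In this hypothetical Python sketch, a customer’s email is copied onto every order row; an update that reaches only one copy leaves the data contradicting itself, which the illustrative `conflicting_facts` helper detects. The rows and the helper are assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical denormalized rows: the customer's email is stored on every order.
orders = [
    {"order_id": 1, "customer_id": 10, "email": "ana@example.com"},
    {"order_id": 2, "customer_id": 10, "email": "ana@example.com"},
    {"order_id": 3, "customer_id": 11, "email": "bo@example.com"},
]

# An update that touches only one of customer 10's rows...
orders[0]["email"] = "ana@new.example.com"


def conflicting_facts(rows, key, attr):
    """Return the keys whose copies of `attr` disagree: the signature of an update anomaly."""
    values = defaultdict(set)
    for row in rows:
        values[row[key]].add(row[attr])
    return {k: v for k, v in values.items() if len(v) > 1}


# ...leaves customer 10 with two different emails.
print(conflicting_facts(orders, "customer_id", "email"))
```

In a normalized design the email would live in a single `customers` row referenced by `customer_id`, so there is only one copy to update and this contradiction cannot arise.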

3. Data Integrity Assurance

Normalization serves as the backbone for scalable software by ensuring data integrity. The AI organizes tables and establishes foreign key relationships so that data remains consistent and accurate across the entire system: each fact is stored once, and every reference must point to a row that actually exists.
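Foreign keys let the database enforce this consistency itself instead of relying on application code. The sketch below uses Python’s built-in `sqlite3` module with a hypothetical two-table schema. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON` is issued on the connection. Other engines differ in syntax and defaults, so treat this as an illustration of the principle, not as output from DB Modeler AI.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite leaves foreign-key enforcement off by default; enable it for this connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id)
    );
""")
conn.execute("INSERT INTO customers VALUES (10, 'ana@example.com')")
conn.execute("INSERT INTO orders VALUES (1, 10)")  # valid: customer 10 exists


def try_exec(sql):
    """Run one statement and report whether the database accepted it."""
    try:
        conn.execute(sql)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


# An order for a customer that does not exist (an orphaned reference).
print(try_exec("INSERT INTO orders VALUES (2, 99)"))  # rejected: FOREIGN KEY constraint failed
# Deleting a customer who still has orders would orphan order 1.
print(try_exec("DELETE FROM customers WHERE customer_id = 10"))  # rejected: FOREIGN KEY constraint failed
```

Both invalid statements are refused by the database, so no orphaned rows can accumulate no matter which application writes the data.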

How Visual Paradigm’s AI DB Modeler Transforms the Process

Visual Paradigm has integrated these principles directly into its AI DB Modeler, changing how developers and architects approach database design. The tool connects natural language requirements directly to technical implementation.

Automated Intelligence and Educational Value

One of the distinct advantages of Visual Paradigm’s approach is the inclusion of educational rationales. Unlike traditional tools that silently execute commands, the AI provides intelligent explanations for every structural change it suggests. This transparency allows users to understand the “why” behind architectural shifts—such as why a table was split or a relationship altered—serving as a powerful learning tool for best-practice design.

From Concept to Production-Ready Output

The ultimate goal of the DB Modeler AI is implementation. By the completion of the normalization step, the abstract conceptual model has been transformed into a normalized, production-ready SQL schema. This output is not just theoretical: it can be tested immediately in an interactive playground or implemented directly via exported DDL scripts. This end-to-end automation can substantially reduce time-to-market for new applications while keeping the underlying data foundation solid, scalable, and standardized.
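The path from model to runnable schema can be pictured with a minimal Python sketch that renders a normalized model as DDL and then loads it into an in-memory SQLite database as a stand-in for a playground. The `MODEL` dictionary format and the `to_ddl` helper are hypothetical; they are not Visual Paradigm’s export format or API, and a production exporter would typically also need to handle SQL dialect differences, indexes, and naming rules.

```python
import sqlite3

# Hypothetical normalized model: table -> column definitions and foreign keys.
# This format is an illustration only, not Visual Paradigm's export format.
MODEL = {
    "customers": {
        "columns": [("customer_id", "INTEGER PRIMARY KEY"), ("city", "TEXT NOT NULL")],
        "fks": [],
    },
    "orders": {
        "columns": [("order_id", "INTEGER PRIMARY KEY"), ("customer_id", "INTEGER NOT NULL")],
        "fks": [("customer_id", "customers", "customer_id")],
    },
}


def to_ddl(model):
    """Render one CREATE TABLE statement per table, in the model's insertion order."""
    statements = []
    for table, spec in model.items():
        parts = [f"{name} {sqltype}" for name, sqltype in spec["columns"]]
        parts += [
            f"FOREIGN KEY ({col}) REFERENCES {ref_table}({ref_col})"
            for col, ref_table, ref_col in spec["fks"]
        ]
        statements.append(f"CREATE TABLE {table} (\n    " + ",\n    ".join(parts) + "\n);")
    return "\n\n".join(statements)


ddl = to_ddl(MODEL)
print(ddl)

# "Playground" check: load the script into an in-memory database and run a join.
conn = sqlite3.connect(":memory:")
conn.executescript(ddl)
conn.execute("INSERT INTO customers VALUES (1, 'Oslo')")
conn.execute("INSERT INTO orders VALUES (100, 1)")
row = conn.execute(
    "SELECT o.order_id, c.city FROM orders o JOIN customers c USING (customer_id)"
).fetchone()
print(row)  # (100, 'Oslo')
```

The join reassembles the original “order with its customer’s city” view from the normalized tables, which is a quick way to confirm that decomposition lost no information.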
